Vincent Sanders: I'll huff, and I'll puff, and I'll blow your house in

Sometimes it really helps to have a different view on a problem and after my recent writings on my Public Suffix List (PSL) library I was fortunate to receive a suggestion from my friend Enrico Zini.

I had asked for suggestions on reducing the size of the library further and Enrico simply suggested Huffman coding. This was a technique I had learned about long ago in connection with data compression and the intervening years had made all the details fuzzy which explains why it had not immediately sprung to mind.

Huffman coding named for David A. Huffman is an algorithm that enables a representation of data which is very efficient. In a normal array of characters every character takes the same eight bits to represent which is the best we can do when any of the 256 values possible is equally likely. If your data is not evenly distributed this is not the case for example if the data was english text then the value is fifteen times more likely to be that for e than k.

Huffman coding named for David A. Huffman is an algorithm that enables a representation of data which is very efficient. In a normal array of characters every character takes the same eight bits to represent which is the best we can do when any of the 256 values possible is equally likely. If your data is not evenly distributed this is not the case for example if the data was english text then the value is fifteen times more likely to be that for e than k.

So if we have some data with a non uniform distribution of probabilities we need a way the data be encoded with fewer bits for the common values and more bits for the rarer values. To be efficient we would need some way of having variable length representations without storing the length separately. The term for this data representation is a prefix code and there are several ways to generate them.

So if we have some data with a non uniform distribution of probabilities we need a way the data be encoded with fewer bits for the common values and more bits for the rarer values. To be efficient we would need some way of having variable length representations without storing the length separately. The term for this data representation is a prefix code and there are several ways to generate them.

Such is the influence of Huffman on the area of prefix codes they are often called Huffman codes even if they were not created using his algorithm. One can dream of becoming immortalised like this, to join the ranks of those whose names are given to units or whole ideas in a field must be immensely rewarding, however given Huffman invented his algorithm and proved it to be optimal to answer a question on a term paper in his early twenties I fear I may already be a bit too late.

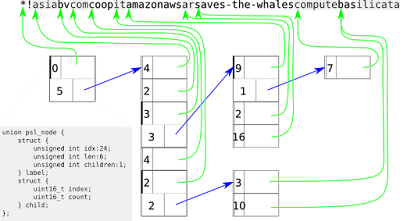

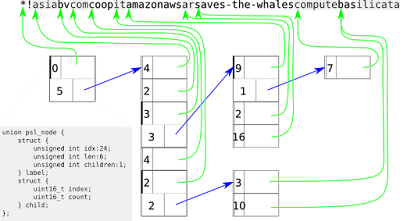

The algorithm itself is relatively straightforward. First a frequency analysis is performed, a fancy way of saying count how many of each character is in the input data. Next a binary tree is created by using a priority queue initialised with the nodes sorted by frequency.

The two least frequent items count is summed together and a node placed in the tree with the two original entries as child nodes. This step is repeated until a single node exists with a count value equal to the length of the input.

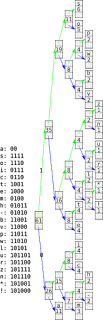

To encode data once simply walks the tree outputting a 0 for a left node or 1 for right node until reaching the original value. This generates a mapping of values to bit output, the input is then simply converted value by value to the bit output. To decode the data the data is used bit by bit to walk the tree to arrive at values.

If we perform this algorithm on the example string table *!asiabvcomcoopitamazonawsarsaves-the-whalescomputebasilicata we can reduce the 488 bits (61 * 8 bit characters) to 282 bits or 40% reduction. Obviously in a real application the huffman tree would need to be stored which would probably exceed this saving but for larger data sets it is probable this technique would yield excellent results on this kind of data.

Once I proved this to myself I implemented the encoder within the existing conversion program. Although my perl encoder is not very efficient it can process the entire PSL string table (around six thousand labels using 40KB or so) in less than a second, so unless the table grows massively an inelegant approach will suffice.

The resulting bits were packed into 32bit values to improve decode performance (most systems prefer to deal with larger memory fetches less frequently) and resulted in 18KB of output or 47% of the original size. This is a great improvement in size and means the statically linked test program is now 59KB and is actually smaller than the gzipped source data.

To be clear the statically linked program can determine if a domain is in the PSL with no additional heap allocations and includes the entire PSL ordered tree, the domain label string table and the huffman decode table to read it.

An unexpected side effect is that because the decode loop is small it sits in the processor cache. This appears to cause the string comparison function huffcasecmp() (which is not locale dependant because we know the data will be limited ASCII) performance to be close to using strcasecmp() indeed on ARM32 systems there is a very modest improvement in performance.

I think this is as much work as I am willing to put into this library but I am pleased to have achieved a result which is on par with the best of breed (libpsl still has a data representation 20KB smaller than libnspsl but requires additional libraries for additional functionality) and I got to (re)learn an important algorithm too.

I had asked for suggestions on reducing the size of the library further and Enrico simply suggested Huffman coding. This was a technique I had learned about long ago in connection with data compression and the intervening years had made all the details fuzzy which explains why it had not immediately sprung to mind.

Huffman coding named for David A. Huffman is an algorithm that enables a representation of data which is very efficient. In a normal array of characters every character takes the same eight bits to represent which is the best we can do when any of the 256 values possible is equally likely. If your data is not evenly distributed this is not the case for example if the data was english text then the value is fifteen times more likely to be that for e than k.

Huffman coding named for David A. Huffman is an algorithm that enables a representation of data which is very efficient. In a normal array of characters every character takes the same eight bits to represent which is the best we can do when any of the 256 values possible is equally likely. If your data is not evenly distributed this is not the case for example if the data was english text then the value is fifteen times more likely to be that for e than k.Such is the influence of Huffman on the area of prefix codes they are often called Huffman codes even if they were not created using his algorithm. One can dream of becoming immortalised like this, to join the ranks of those whose names are given to units or whole ideas in a field must be immensely rewarding, however given Huffman invented his algorithm and proved it to be optimal to answer a question on a term paper in his early twenties I fear I may already be a bit too late.

The algorithm itself is relatively straightforward. First a frequency analysis is performed, a fancy way of saying count how many of each character is in the input data. Next a binary tree is created by using a priority queue initialised with the nodes sorted by frequency.

The two least frequent items count is summed together and a node placed in the tree with the two original entries as child nodes. This step is repeated until a single node exists with a count value equal to the length of the input.

To encode data once simply walks the tree outputting a 0 for a left node or 1 for right node until reaching the original value. This generates a mapping of values to bit output, the input is then simply converted value by value to the bit output. To decode the data the data is used bit by bit to walk the tree to arrive at values.

If we perform this algorithm on the example string table *!asiabvcomcoopitamazonawsarsaves-the-whalescomputebasilicata we can reduce the 488 bits (61 * 8 bit characters) to 282 bits or 40% reduction. Obviously in a real application the huffman tree would need to be stored which would probably exceed this saving but for larger data sets it is probable this technique would yield excellent results on this kind of data.

Once I proved this to myself I implemented the encoder within the existing conversion program. Although my perl encoder is not very efficient it can process the entire PSL string table (around six thousand labels using 40KB or so) in less than a second, so unless the table grows massively an inelegant approach will suffice.

The resulting bits were packed into 32bit values to improve decode performance (most systems prefer to deal with larger memory fetches less frequently) and resulted in 18KB of output or 47% of the original size. This is a great improvement in size and means the statically linked test program is now 59KB and is actually smaller than the gzipped source data.

$ ls -alh test_nspsl

-rwxr-xr-x 1 vince vince 59K Sep 25 23:58 test_nspsl

$ ls -al public_suffix_list.dat.gz

-rw-r--r-- 1 vince vince 62K Sep 1 08:52 public_suffix_list.dat.gz

To be clear the statically linked program can determine if a domain is in the PSL with no additional heap allocations and includes the entire PSL ordered tree, the domain label string table and the huffman decode table to read it.

An unexpected side effect is that because the decode loop is small it sits in the processor cache. This appears to cause the string comparison function huffcasecmp() (which is not locale dependant because we know the data will be limited ASCII) performance to be close to using strcasecmp() indeed on ARM32 systems there is a very modest improvement in performance.

I think this is as much work as I am willing to put into this library but I am pleased to have achieved a result which is on par with the best of breed (libpsl still has a data representation 20KB smaller than libnspsl but requires additional libraries for additional functionality) and I got to (re)learn an important algorithm too.

If that wasn't all bad enough, now there is a new threat: while you are relaxing in the sun, scammers fool your phone company into issuing a replacement SIM card or transferring your mobile number to a new provider and then proceed to use it to take over all your email, social media, Paypal and bank accounts. The same scam has been appearing around the globe, from

If that wasn't all bad enough, now there is a new threat: while you are relaxing in the sun, scammers fool your phone company into issuing a replacement SIM card or transferring your mobile number to a new provider and then proceed to use it to take over all your email, social media, Paypal and bank accounts. The same scam has been appearing around the globe, from  For phone companies, SMS messaging came as a side-effect of digital communications for mobile handsets. It is less than one percent of their business. SMS authentication is less than one percent of that. Phone companies lose little or nothing when SMS messages are hijacked so there is little incentive for them to secure it. Nonetheless, like insects riding on an elephant, numerous companies have popped up with a business model that involves linking websites to the wholesale telephone network and dressing it up as a "security" solution. These companies are able to make eye-watering profits by "purchasing" text messages for $0.01 and selling them for $0.02 (one hundred percent gross profit), but they also have nothing to lose when SIM cards are hijacked and therefore minimal incentive to take any responsibility.

Companies like Google, Facebook and Twitter have thrown more fuel on the fire by encouraging and sometimes even demanding users provide mobile phone numbers to "prove they are human" or "protect" their accounts. Through these antics, these high profile companies have given a vast percentage of the population a false sense of confidence in codes delivered by mobile phone, yet the real motivation for these companies does not appear to be security at all: they have worked out that the mobile phone number is the holy grail in cross-referencing vast databases of users and customers from different sources for all sorts of creepy purposes. As most of their services don't involve any financial activity, they have little to lose if accounts are compromised and everything to gain by accurately gathering mobile phone numbers from as many users as possible.

For phone companies, SMS messaging came as a side-effect of digital communications for mobile handsets. It is less than one percent of their business. SMS authentication is less than one percent of that. Phone companies lose little or nothing when SMS messages are hijacked so there is little incentive for them to secure it. Nonetheless, like insects riding on an elephant, numerous companies have popped up with a business model that involves linking websites to the wholesale telephone network and dressing it up as a "security" solution. These companies are able to make eye-watering profits by "purchasing" text messages for $0.01 and selling them for $0.02 (one hundred percent gross profit), but they also have nothing to lose when SIM cards are hijacked and therefore minimal incentive to take any responsibility.

Companies like Google, Facebook and Twitter have thrown more fuel on the fire by encouraging and sometimes even demanding users provide mobile phone numbers to "prove they are human" or "protect" their accounts. Through these antics, these high profile companies have given a vast percentage of the population a false sense of confidence in codes delivered by mobile phone, yet the real motivation for these companies does not appear to be security at all: they have worked out that the mobile phone number is the holy grail in cross-referencing vast databases of users and customers from different sources for all sorts of creepy purposes. As most of their services don't involve any financial activity, they have little to lose if accounts are compromised and everything to gain by accurately gathering mobile phone numbers from as many users as possible.

This recent book of the Austrian author

This recent book of the Austrian author  Michael K hlmeier s recently released book Zwei Herren am Strand tells the fictive story of Charlie Chaplin and Winston Churchill meeting and becoming friends, helping each other fighting depression and suicide thoughts. Based on a bunch of (fictive) letters of a (fictive) private secretary of Churchill, as well as (fictive) book on Chaplin, the first person narrator dives into the interesting time of the mid-20ies to about the Second World War.

Michael K hlmeier s recently released book Zwei Herren am Strand tells the fictive story of Charlie Chaplin and Winston Churchill meeting and becoming friends, helping each other fighting depression and suicide thoughts. Based on a bunch of (fictive) letters of a (fictive) private secretary of Churchill, as well as (fictive) book on Chaplin, the first person narrator dives into the interesting time of the mid-20ies to about the Second World War.

Chaplin is having a hard time after the divorce from his wife Rita, paired with the difficulties at the production of

Chaplin is having a hard time after the divorce from his wife Rita, paired with the difficulties at the production of

Last week we lost another great musician, song writer, artist. It's painful to realise that more and more of the people you grew up with aren't there anymore. We lost

Last week we lost another great musician, song writer, artist. It's painful to realise that more and more of the people you grew up with aren't there anymore. We lost

Today was my last day at

Today was my last day at